In the very early ages we used to say that "Information is virtue"; later it changed to "Information is power". In the present age of information technology, information is no longer power, because information is everywhere. What matters is using it correctly and in time. Take an example: two recruitment agencies both have a practically unlimited number of job seekers' resumes in their databases. An organization approaches both of them and asks for a list of candidates matching particular criteria. The firm with the faster mechanism for fetching the information from its database will win the contract. So in the present age, only those organizations that have faster access to information will survive. This is a "survival of the fastest" scenario for the market.

Today I want to discuss several ways to improve the performance of your SQL Server based systems.

Hardware:

Use Faster Storage Technologies:

It is true that by writing efficient code we can improve performance. But this code has to run on hardware, so by using efficient hardware we can also improve the performance of our systems. These days we use multi-core processors and many gigabytes of fast primary memory. The problem is that our database systems depend on data stored on persistent storage drives. A typical magnetic drive spins at 7200 rpm (faster server drives reach 10,000 or 15,000 rpm), and this proves to be a bottleneck. As software people, we are generally not concerned with the speed of our hard drives. But just by using storage that is more responsive to the data requirements of our system, we can improve our systems manyfold.

Having discussed this, a question pops up in our minds: can we increase the speed of our drives? The answer is YES. This can be done by using drives that are faster. Gone are the days when organizations had to use magnetic storage drives because of their capacity, cost and reliability. These days, organizations are moving towards solid-state options for persistent storage. This storage has much better random-access performance, so it can process data much faster than its magnetic counterparts.

The only problem is that these drives are not marketed as they deserve to be. But as enthusiasts, we should dig into the technology to get the best out of it.

Software:

a. Efficient use of indexes

First things first: an index does not improve performance for every operation. If a table mostly serves SELECT operations, index the columns it is searched on. But if a table mostly receives inserts / updates / deletes, think twice before adding indexes, because every index has to be maintained on each write. These operations would not only become slower but would also create fragmentation problems.
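To see the read-side benefit of an index, here is a minimal sketch. It uses Python's built-in sqlite3 module only as a convenient stand-in for SQL Server; the `candidates` table, its columns and the index name are made up for illustration, and SQL Server's own execution plans look different. The idea it shows is the same: before the index the engine scans the whole table, after the index it can search on the indexed column.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return the plan descriptions SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the description

# Hypothetical table of job seekers, filled with sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE candidates (id INTEGER PRIMARY KEY, name TEXT, skill TEXT)")
conn.executemany(
    "INSERT INTO candidates (name, skill) VALUES (?, ?)",
    [(f"candidate{i}", f"skill{i % 50}") for i in range(5000)],
)

lookup = "SELECT name FROM candidates WHERE skill = ?"

# Without an index the engine has to scan the whole table.
plan_before = query_plan(conn, lookup, ("skill7",))

# With an index on the searched column it can seek directly.
conn.execute("CREATE INDEX idx_candidates_skill ON candidates (skill)")
plan_after = query_plan(conn, lookup, ("skill7",))

print(plan_before)
print(plan_after)
```

The price of the faster lookup is that every later insert, update or delete on `candidates` also has to maintain `idx_candidates_skill`.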

b. Computed columns

This is another decision you have to take based on your scenario. If you have more inserts / updates, then using persisted computed columns can significantly reduce the performance of your system, because the value has to be recomputed on every write. If instead your system involves more SELECT operations, then computed columns can be a step towards a drastic performance improvement.

c. Selecting the data types of columns in a table

This aspect is sometimes ignored when improvements are discussed. Always consider the following points when you select the data type of a column.

• You should have a very good reason not to use non-Unicode VARCHAR for character data. One reason may be a requirement to store more than 8000 bytes; another may be special Unicode characters that you have to store. Otherwise, you can do just fine with this data type.

• Always remember that integer-based columns are much faster to sort when used in an ORDER BY clause.

• Never use the Text or BigInt data types unless you need the extra storage.

• Using an integer-based data type for the primary key improves performance compared to text-based or floating-point-based ones.

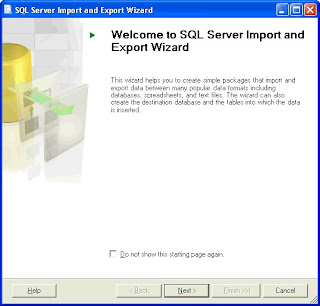

d. Bulk inserts / updates

Whenever bulk inserts or updates are involved, the recovery model affects speed: under the FULL recovery model every operation is fully logged, which slows the load down. So what you can do is change the recovery model for the duration of the operation (bulk imports can be minimally logged under BULK_LOGGED or SIMPLE), do your operation, and then change the model back to the previous one.

Indexes can also delay these operations.

Always try to pass data as XML to your stored procedures and use OPENXML for bulk inserts or updates. The operation is then done through a single INSERT / UPDATE statement as a SET based operation. Avoid using DataAdapters for updates, even if you are using UpdateBatchSize with them. The reason is that this is not SET based: more than one UPDATE statement is still passed to SQL Server. Batching only decreases the roundtrips between your application and SQL Server; the number of UPDATE statements executed on the server stays the same. The maximum value for UpdateBatchSize is also limited. But with the XML option you can send the complete data of a DataTable.
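As a rough illustration of the client side of this idea, the sketch below serializes a set of rows into one XML document that could be passed to a stored procedure as a single parameter. It is written in Python rather than .NET, and the element names (`rows`, `row`) and the sample columns are assumptions made for the example; the stored procedure that would read the document with OPENXML is not shown.

```python
import xml.etree.ElementTree as ET

def rows_to_xml(rows, root_tag="rows", row_tag="row"):
    """Serialize a list of dicts into one XML document, one element per row."""
    root = ET.Element(root_tag)
    for row in rows:
        # Each column becomes an attribute of the row element.
        ET.SubElement(root, row_tag, {key: str(value) for key, value in row.items()})
    return ET.tostring(root, encoding="unicode")

# Hypothetical rows, standing in for the contents of a DataTable.
candidates = [
    {"id": 1, "name": "Alice", "skill": "SQL"},
    {"id": 2, "name": "Bob", "skill": "C#"},
]

# One payload, one roundtrip, one statement on the server side.
payload = rows_to_xml(candidates)
print(payload)
```

However many rows there are, the application still makes one call, and the server can insert or update all of them with one SET based statement.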

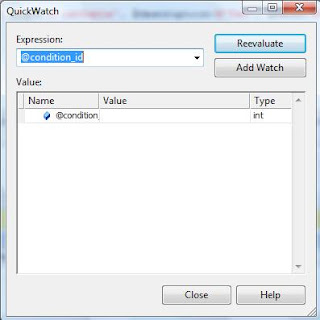

e. Parameterized queries

You might have read that compiled queries run faster. This is because the DBMS can reuse the cached plan instead of creating a new plan each time the query runs with different values. Parameterization also helps as a precaution against SQL injection attacks.
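The injection side of this can be shown with a small sketch. Again it uses Python's built-in sqlite3 module as a stand-in for SQL Server, and the table and function names are invented for the example. The first function builds the SQL text by concatenation; the second passes the value as a parameter, so the input is always treated as data and never as part of the statement.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE candidates (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO candidates (name) VALUES (?)", [("Alice",), ("Bob",)])

def find_unsafe(name):
    # Anti-pattern: user input is concatenated into the SQL text.
    sql = "SELECT name FROM candidates WHERE name = '" + name + "'"
    return conn.execute(sql).fetchall()

def find_safe(name):
    # Parameterized: the statement text is fixed, only the value changes.
    return conn.execute("SELECT name FROM candidates WHERE name = ?", (name,)).fetchall()

malicious = "x' OR '1'='1"
unsafe_rows = find_unsafe(malicious)  # the injected OR clause matches every row
safe_rows = find_safe(malicious)      # no candidate has this literal name

print(len(unsafe_rows), len(safe_rows))
```

Because the text of the safe statement never changes, the database also gets the chance to reuse its plan for every call.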

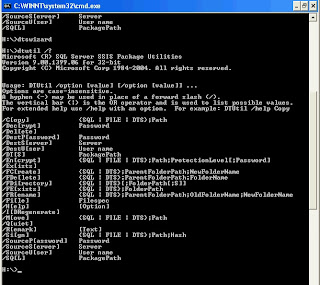

f. Stored procedures

Stored procedures also help in preventing SQL injection attacks. They also decrease the roundtrips between the application and the database server, because many statements are combined in one procedure. This bunch of statements can then be run as a single transaction inside the procedure. There are many other benefits as well, but the ones mentioned are enough to make stored procedures a first priority.

g. Stop the lavish use of explicit cursors

This has been said by so many people so many times that repeating it is hardly necessary. I will just give you my own example. A few years back I was given a stored procedure in which the previous developer had made lavish use of explicit cursors. Its execution time had risen to 40 minutes by then because of the growth of data in the database, and it was expected to increase further as the data grew. Just by replacing these cursors with some SET based operations, I was able to bring that execution time down to 3 seconds. This was a great achievement on my part. From this example alone you can see that the use of cursors is a killer for the performance of your system.
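The difference between the two styles can be sketched as follows. This is not the procedure from my story, only a toy comparison using Python's built-in sqlite3 module as a stand-in for SQL Server, with a made-up `salaries` table. The first function mimics a cursor by fetching the rows and updating them one at a time; the second does the same work with a single SET based UPDATE.

```python
import sqlite3

def make_db():
    """Create an in-memory database with a hypothetical salaries table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE salaries (id INTEGER PRIMARY KEY, amount INTEGER)")
    conn.executemany(
        "INSERT INTO salaries (amount) VALUES (?)",
        [(1000 + i,) for i in range(1000)],
    )
    return conn

def raise_row_by_row(conn):
    # Cursor style: read every row, then issue one UPDATE per row.
    rows = conn.execute("SELECT id, amount FROM salaries").fetchall()
    for row_id, amount in rows:
        conn.execute("UPDATE salaries SET amount = ? WHERE id = ?", (amount + 100, row_id))

def raise_set_based(conn):
    # SET based style: one statement updates all rows.
    conn.execute("UPDATE salaries SET amount = amount + 100")

def total(conn):
    return conn.execute("SELECT SUM(amount) FROM salaries").fetchone()[0]

slow_db, fast_db = make_db(), make_db()
raise_row_by_row(slow_db)
raise_set_based(fast_db)

# Both approaches produce the same data; only the amount of work differs.
print(total(slow_db) == total(fast_db))
```

The row-by-row version executes a thousand statements where the SET based version executes one, and that gap grows with the data.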